August 31, 2025

I packaged several models as MCP tools and ran them with Claude Code and BlenderMCP to make a scene generation agent.

Model Context Protocol is a mechanism for surfacing additional tools to LLMs. As a large language model, Claude is aware of Blender as a concept, but can't natively interact with the program. MCP lets us surface arbitrary tools for Claude to interact with, enabling the development of more powerful and autonomous agents.

The popular BlenderMCP addon surfaces several useful Blender tools: get_scene_info, get_object_info, get_viewport_screenshot, and execute_blender_code. This handful of commands alone considerably uplevels Claude's Blender power: now Claude can ideate scenes, execute Blender Python to generate scene data (meshes, materials, lighting, etc.), and inspect the state of the scene.

BlenderMCP also surfaces some remote tools like the PolyHaven and Sketchfab asset libraries, and Hyper3D, a paid service with an impressive asset generation model. But for the sake of learning and exercising my new GPU, I set about writing MCP tools to run additional generative models locally.

After 10 years of not developing on a Windows machine, I was pleased to learn that nowadays, you can easily run Linux VMs on Windows via WSL (Windows Subsystem for Linux). I was able to run VSCode (with the highly useful Blender extension) and Blender in Windows, but Claude Code and Python model inference scripts in Linux. This workflow is about 90% there, but there were some very subtle sharp knives to watch out for.

1. Windows Localhost Is Not Linux Localhost

Under normal operation, the BlenderMCP addon listens on localhost:9876, and your MCP client (e.g. Claude) invokes tools through the server started with uvx blender-mcp, which talks to that socket. However, if you run Blender in Windows and Claude in WSL, the two do not share a localhost address and cannot communicate. This should be configurable via the BLENDER_HOST environment variable. I tried:

    claude mcp add blender --env BLENDER_HOST=$(ip route | awk '/default/ { print $3 }') -- uvx blender-mcp

but the environment variable didn't appear to be passed. So I hacked around this by replacing all instances of localhost in a local copy of the server with the Windows host's IP address as seen from WSL (the output of ip route | awk '/default/ { print $3 }'), then registered the locally changed MCP server via:

    claude mcp add blender -- python /mnt/c/Users/name/blender-mcp/src/blender_mcp/server.py
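
The ip route lookup above can also be done from Python, for example in a launcher script that needs the host address. This is a minimal sketch and not part of BlenderMCP: parse_default_gateway and windows_host_ip are hypothetical helpers, and the sketch assumes WSL2's default NAT networking, where the default gateway is the Windows host.

```python
import subprocess
from typing import Optional


def parse_default_gateway(route_output: str) -> Optional[str]:
    """Return the gateway IP from `ip route` output, or None if absent.

    Roughly mirrors: ip route | awk '/default/ { print $3 }'
    """
    for line in route_output.splitlines():
        fields = line.split()
        # A default route looks like: "default via 172.20.0.1 dev eth0 ..."
        if len(fields) >= 3 and fields[0] == "default":
            return fields[2]
    return None


def windows_host_ip() -> Optional[str]:
    """Run `ip route` inside WSL and extract the Windows host address."""
    result = subprocess.run(
        ["ip", "route"], capture_output=True, text=True, check=True
    )
    return parse_default_gateway(result.stdout)
```

Keeping the parsing separate from the subprocess call makes the logic easy to check against a pasted sample of ip route output.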

2. Struggles to Read Images

Claude Code supports image understanding, but it usually didn't work across WSL. WSL has a shared directory, known to WSL as /mnt/c/Users/$USER/ and to Windows as C:\Users\$USER\. So if I tried to drag an image from my Windows file browser into Claude Code, the Windows filepath was passed, and Claude couldn't read it using Linux file utilities.
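
The path mismatch is mechanical, so it can be translated before a path is handed to Linux tools. Below is a minimal sketch with a hypothetical helper, windows_to_wsl_path, which assumes the default WSL automount layout (/mnt/<drive letter>/). WSL also ships a wslpath utility for the same purpose.

```python
import re


def windows_to_wsl_path(path: str) -> str:
    r"""Convert a Windows path like C:\Users\me\a.png to /mnt/c/Users/me/a.png.

    Paths that do not start with a drive letter are returned unchanged.
    """
    match = re.match(r"^([A-Za-z]):[\\/](.*)$", path)
    if match is None:
        return path
    drive, rest = match.groups()
    # Lowercase the drive letter and switch to forward slashes
    return f"/mnt/{drive.lower()}/" + rest.replace("\\", "/")
```

For example, windows_to_wsl_path(r"C:\Users\name\image.png") yields a path under /mnt/c/Users/name/ that Linux file utilities can open.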

For learning's sake, I wanted to finetune a base model to generate Blender code locally. I started with deepseek-coder-6.7b-base and was surprised by my initial interactions:

    $ ollama run deepseek-coder:6.7b-base
    >>> write a python function to reverse a string

    .sample string: 1234abcdoutput :dcba
    <jupyter_code>
    def rev(s):
        st=""
        for i in s:
            st =i+st #or st=i + str --by join method
        return st
    rev("1234abcd")
    str[::-1]#--by slicing operator
    s="1234abcd"
    l=list(s)
    l.reverse() # -- by list method
    "".join(l)
    #using while loop
    def rev_whilwloop(string):
        rstr = ""
        length = len(string) - 1
        while length >= 0:
            rstr += string[length]
            length -= 1
        return rstr
    rev_whilwloop("1234abcd")
    <jupyter_output>
    <empty_output>

The model generated various string reversal methods of varying quality, along with commentary, method calls, and unrelated tokens, rather than the neatly formatted chatbot output we're accustomed to. Let's unpack the model name to understand what's going on:

From this point, we have several high level control knobs:

I wrote a script that runs LoRA (low-rank adaptation) on the base model, with a Claude-generated dataset of about 200 examples. Yes, I used an LLM to write a script to tune another LLM with LLM-generated data. Example data:

    instruction: Create a cube in Blender using Python
    output: # Create a cube\nbpy.ops.mesh.primitive_cube_add(location=(0, 0, 0))
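
Before tuning, each instruction/output pair has to be flattened into a single training string. The sketch below shows one way this might look. The "### Instruction / ### Response" template and the format_example helper are assumptions for illustration, not necessarily what the tuning script used.

```python
def format_example(instruction: str, output: str) -> str:
    """Join one instruction/output pair into a single training string."""
    return (
        "### Instruction:\n"
        f"{instruction.strip()}\n\n"
        "### Response:\n"
        f"{output.strip()}\n"
    )


# One row in the same shape as the example data above
dataset = [
    {
        "instruction": "Create a cube in Blender using Python",
        "output": "# Create a cube\nbpy.ops.mesh.primitive_cube_add(location=(0, 0, 0))",
    },
]

training_texts = [format_example(**row) for row in dataset]
```

Whatever template is chosen, the same one should be applied to prompts at inference time so the tuned model sees the format it was trained on.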

The model's accuracy improved from 0% to about 50% after finetuning. That's not good enough for production, but it felt impressive for such a small training set, considering that prior to tuning, the model wouldn't even generate actual code for Blender-themed prompts.

prompt: Add a black material to the selected object

pre-tuning response:

I would add a black material to this object, as it looks like there's not enough contrast between its color and background.

post-tuning response:

    # Add black material to active object
    if bpy.context.active_object and bpy.context.active_object.type == 'MESH':
        mat = bpy.data.materials.new(name="BlackMaterial")
        mat.use_nodes = True
        principled = mat.node_tree.nodes.get('Principled BSDF')
        if principled:
            principled.inputs['Base Color'].default_value = (0, 0, 0, 1)
        # Assign to object
        if bpy.context.active_object.data.materials:
            bpy.context.active_object.data.materials[0] = mat
        else:
            bpy.context.active_object.data.materials.append(mat)

After this exercise, I downloaded BlenderLLM, a more sophisticated tuning of Qwen2.5-Coder-7B-Instruct, packaged it as a CLI tool using pipx, and wrote a Node.js server to invoke it as an MCP tool:

    // Handle tool calls
    server.setRequestHandler(CallToolRequestSchema, async (request) => {
      const { name, arguments: args } = request.params;
      try {
        let result;
        switch (name) {
          case "generate_blender_code":
            // generateBlenderCode calls the inference CLI
            result = await generateBlenderCode(args.prompt);
            return {
              content: [
                {
                  type: "text",
                  text: result
                }
              ]
            };
          ...
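
The handler above delegates to a helper that shells out to the inference CLI. That helper isn't shown, so here is a hedged sketch of the same pattern in Python rather than Node.js. run_cli_tool is a hypothetical name, the CLI is assumed to take the prompt as its last argument, and the stand-in command just echoes the prompt so the example runs without a model.

```python
import subprocess
import sys


def run_cli_tool(command: list, prompt: str, timeout_s: float = 300.0) -> dict:
    """Run a CLI with the prompt as its last argument and wrap the
    result in an MCP-style text content payload."""
    completed = subprocess.run(
        command + [prompt], capture_output=True, text=True, timeout=timeout_s
    )
    if completed.returncode != 0:
        # Surface stderr to the client and flag the call as failed
        return {
            "content": [{"type": "text", "text": completed.stderr.strip()}],
            "isError": True,
        }
    return {"content": [{"type": "text", "text": completed.stdout.strip()}]}


# Stand-in for the real inference CLI: echoes its argument as a comment.
fake_cli = [sys.executable, "-c", "import sys; print('# code for: ' + sys.argv[1])"]
response = run_cli_tool(fake_cli, "add a cube")
```

Passing the prompt as a list element rather than interpolating it into a shell string avoids quoting problems with arbitrary prompt text.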

The final agent didn't make much use of this. I suppose Claude Code is already good enough at generating Blender code and iterating on feedback that it doesn't need to delegate to an MCP tool.

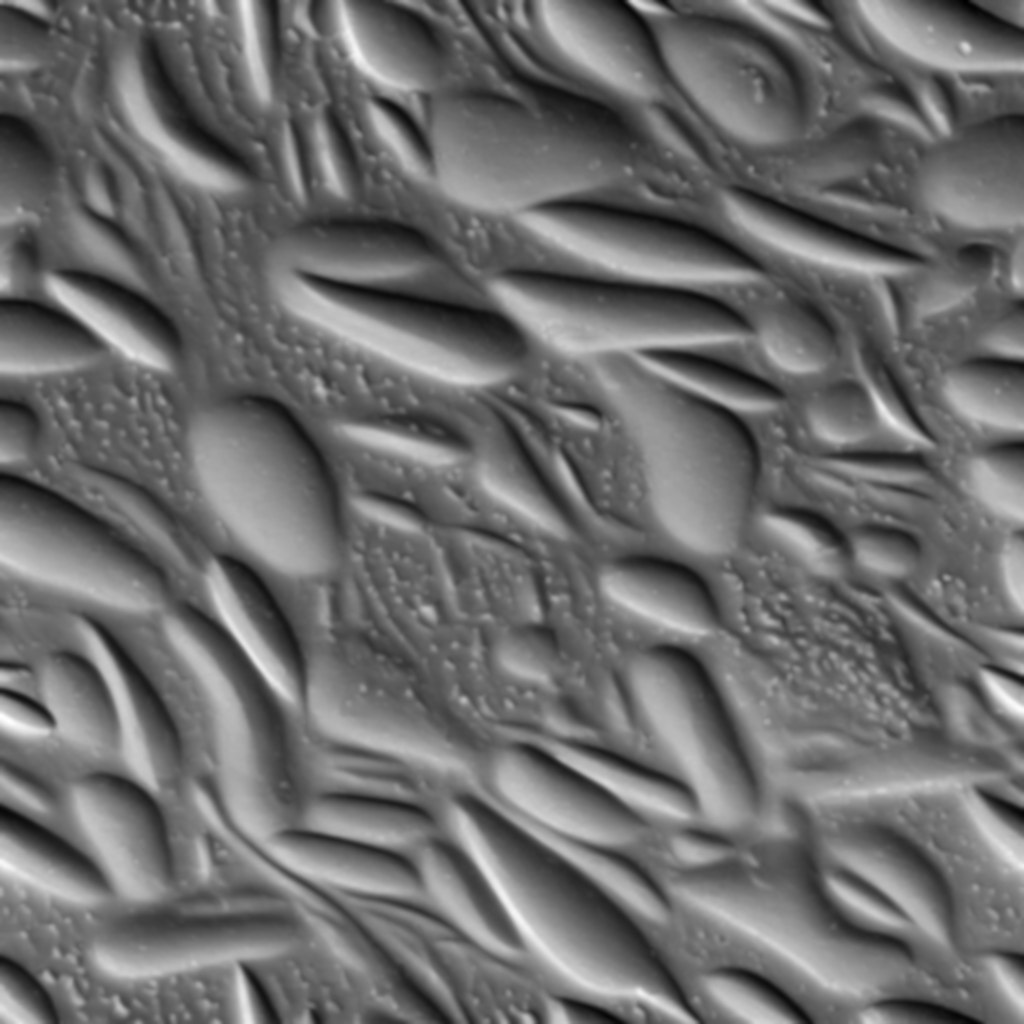

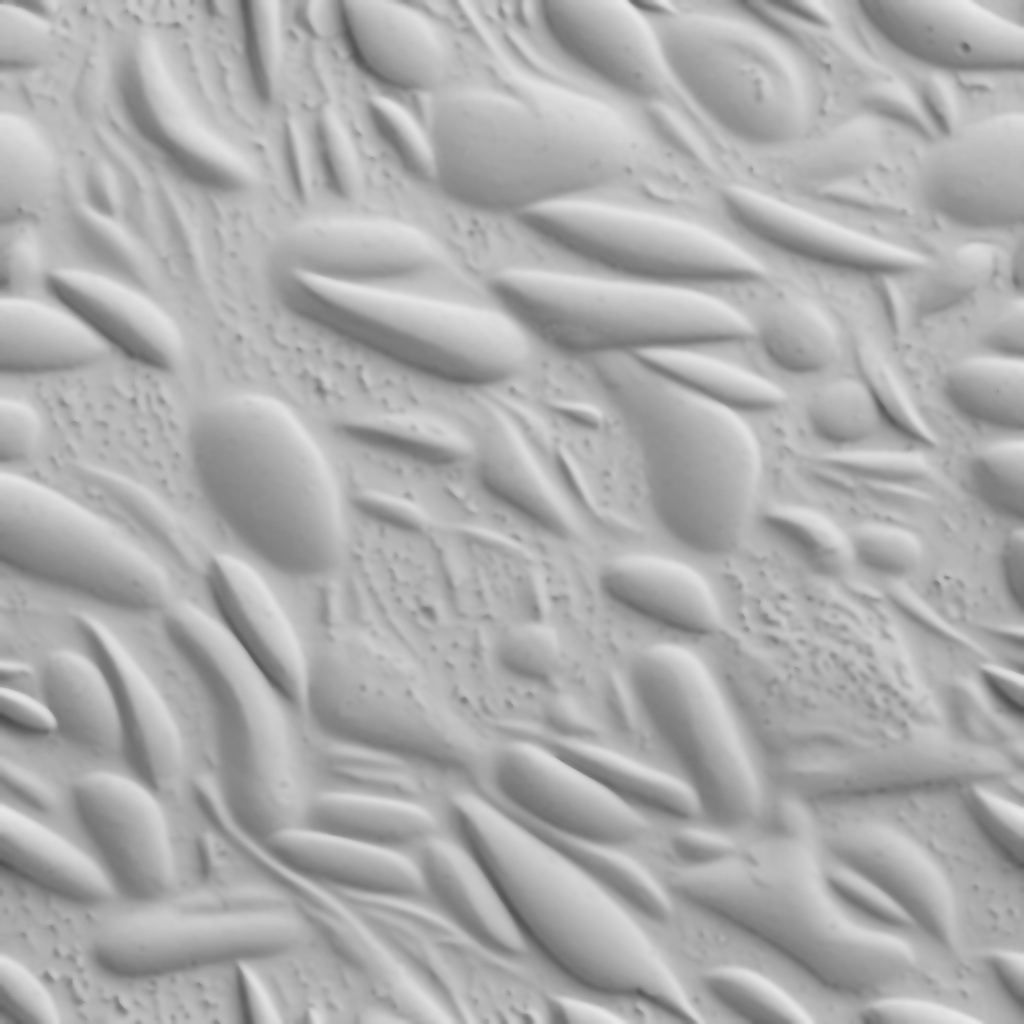

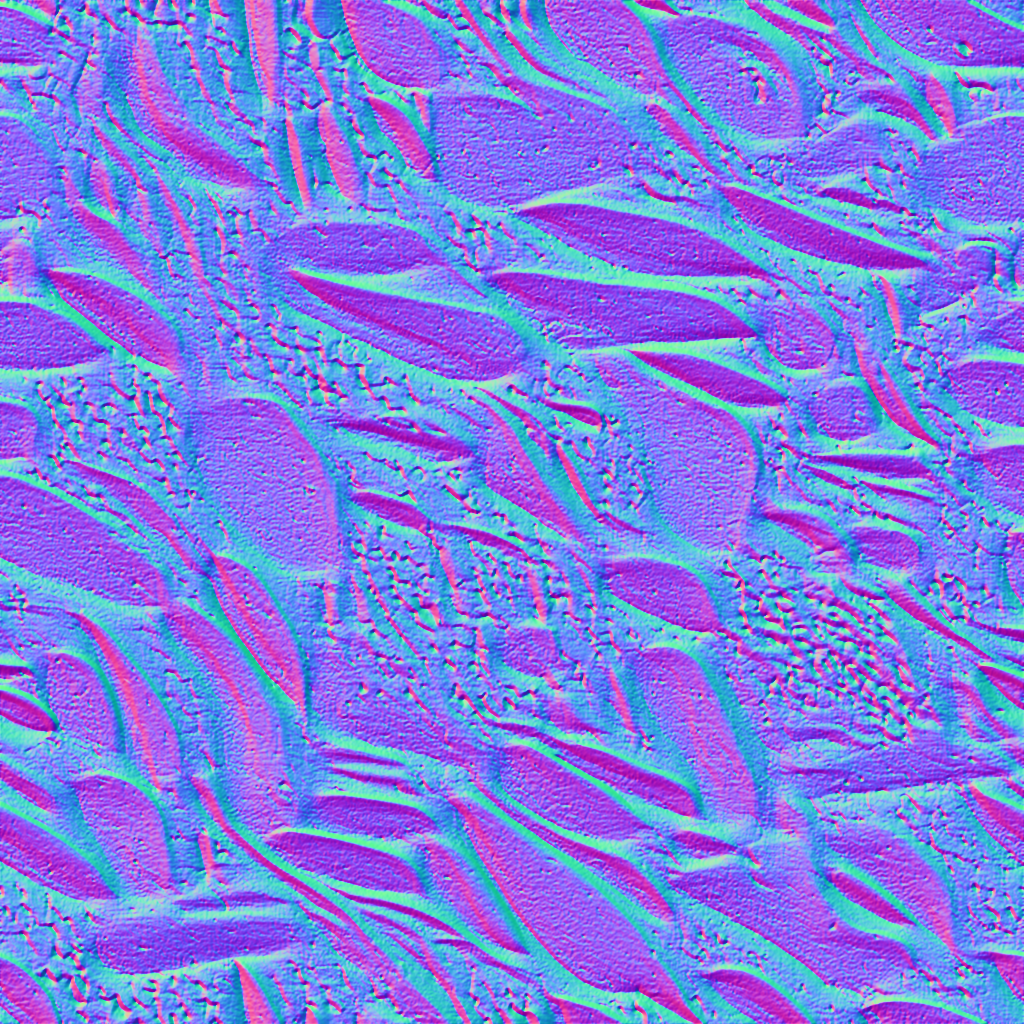

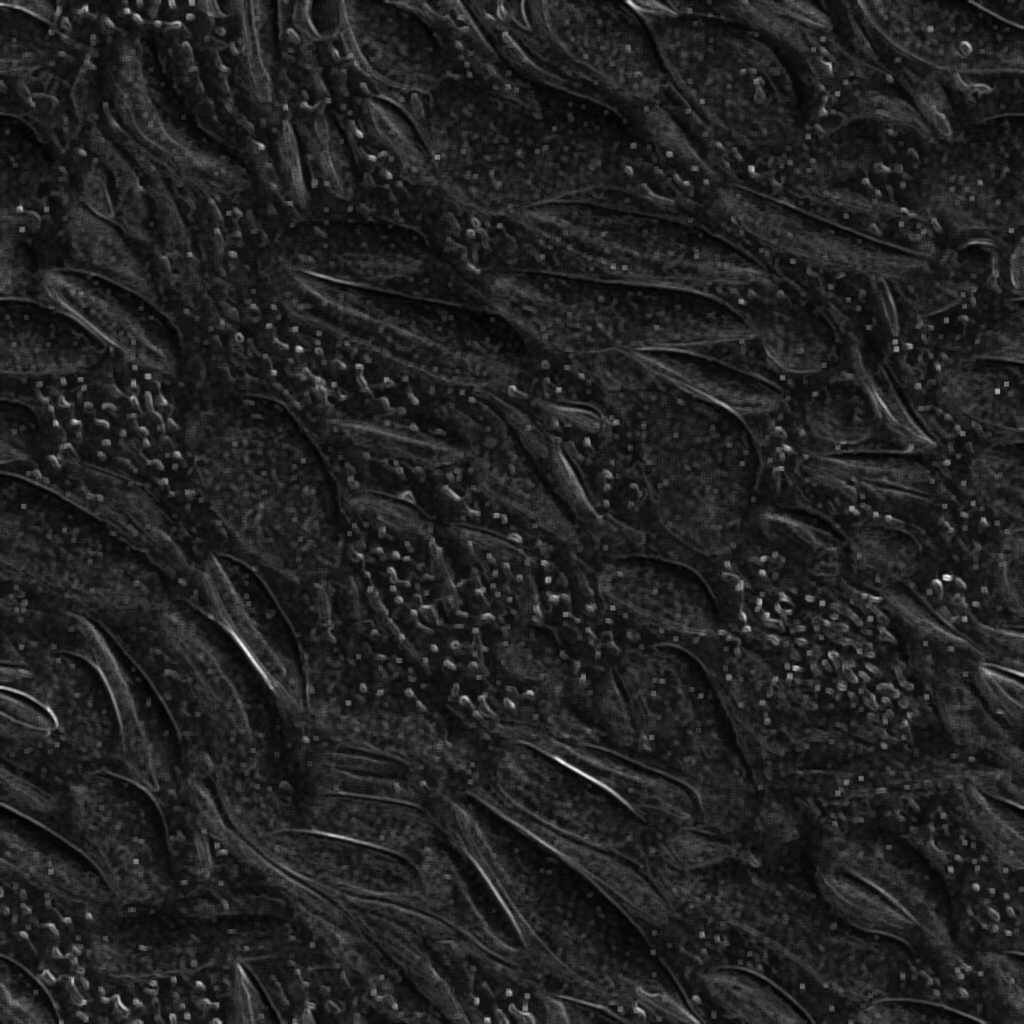

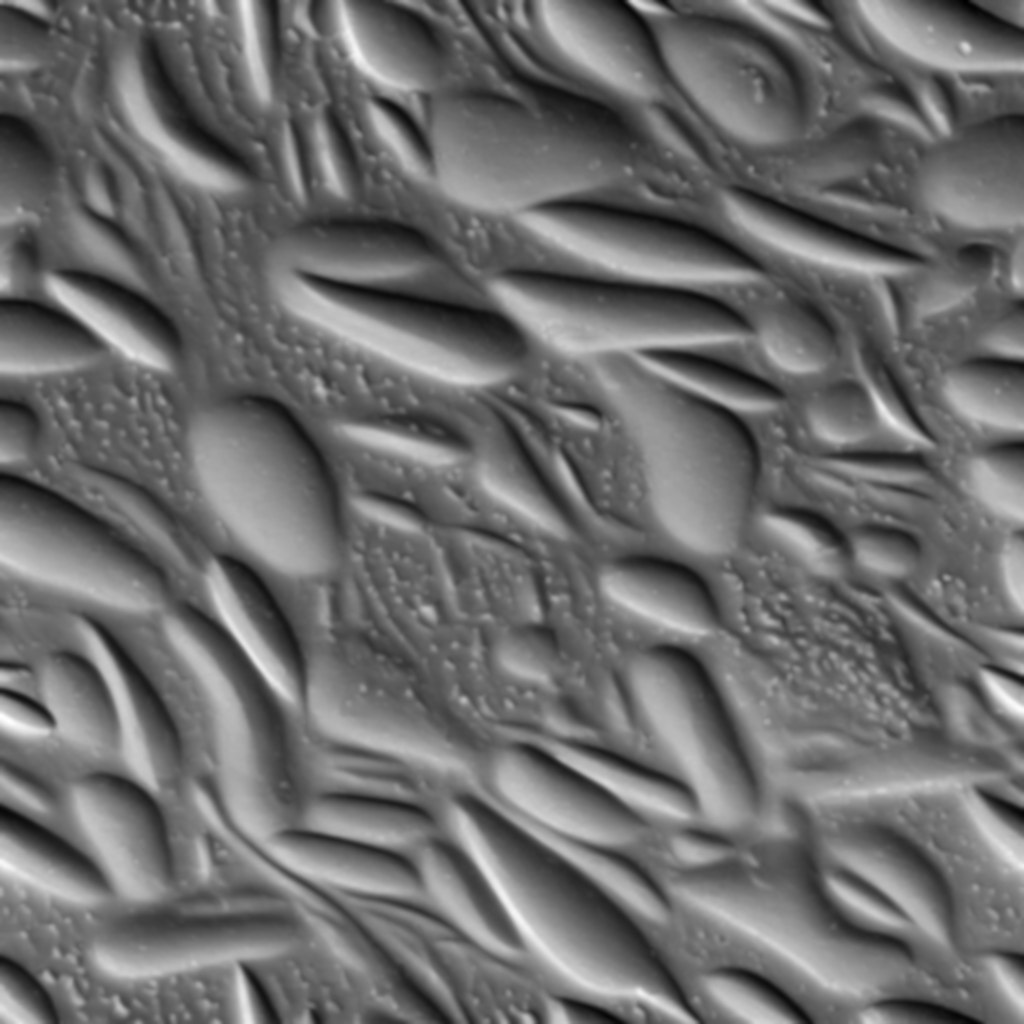

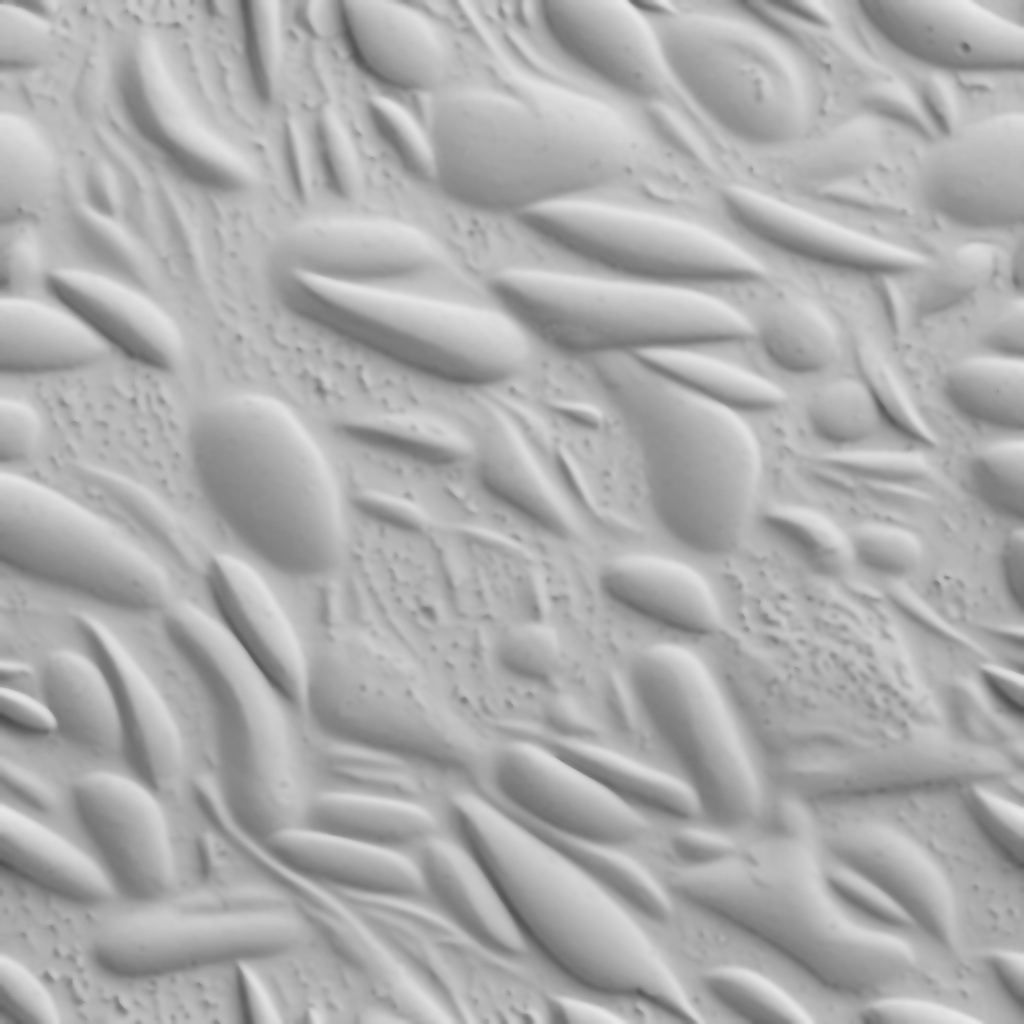

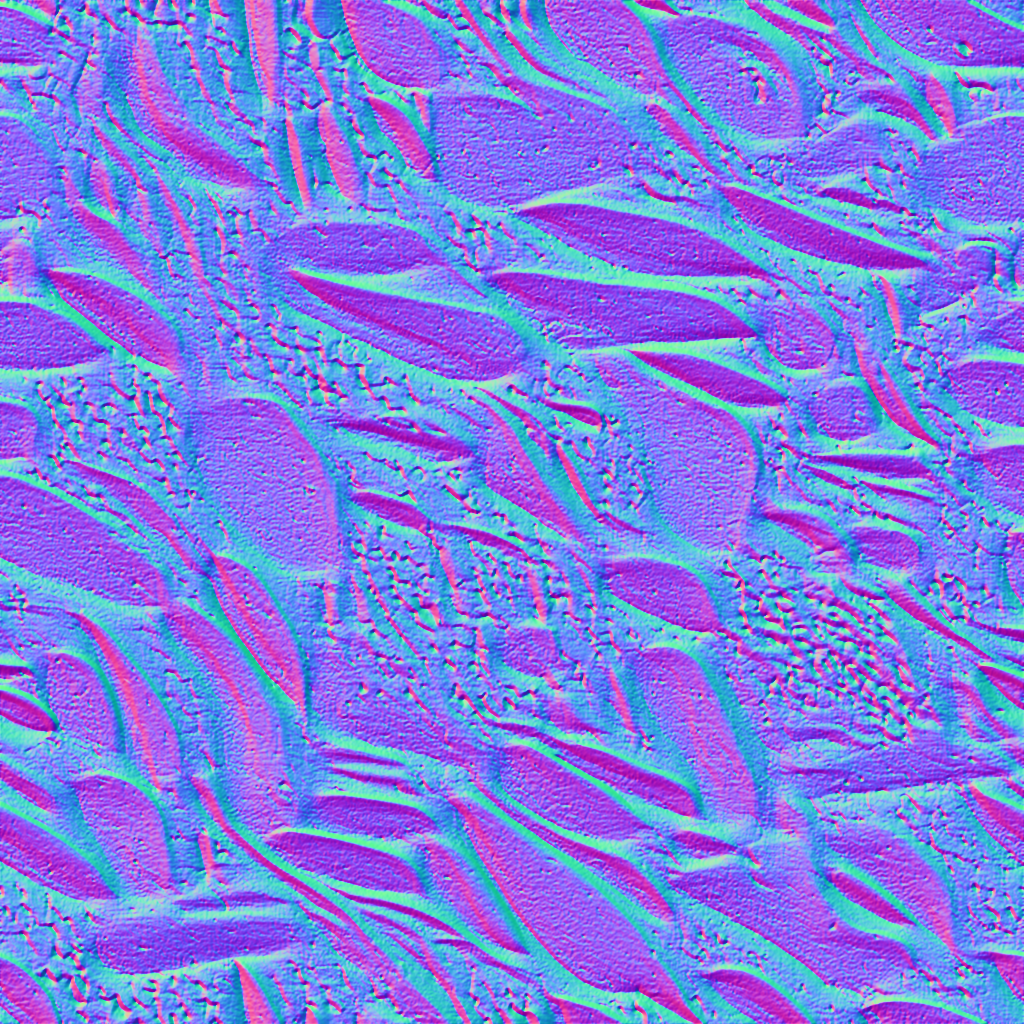

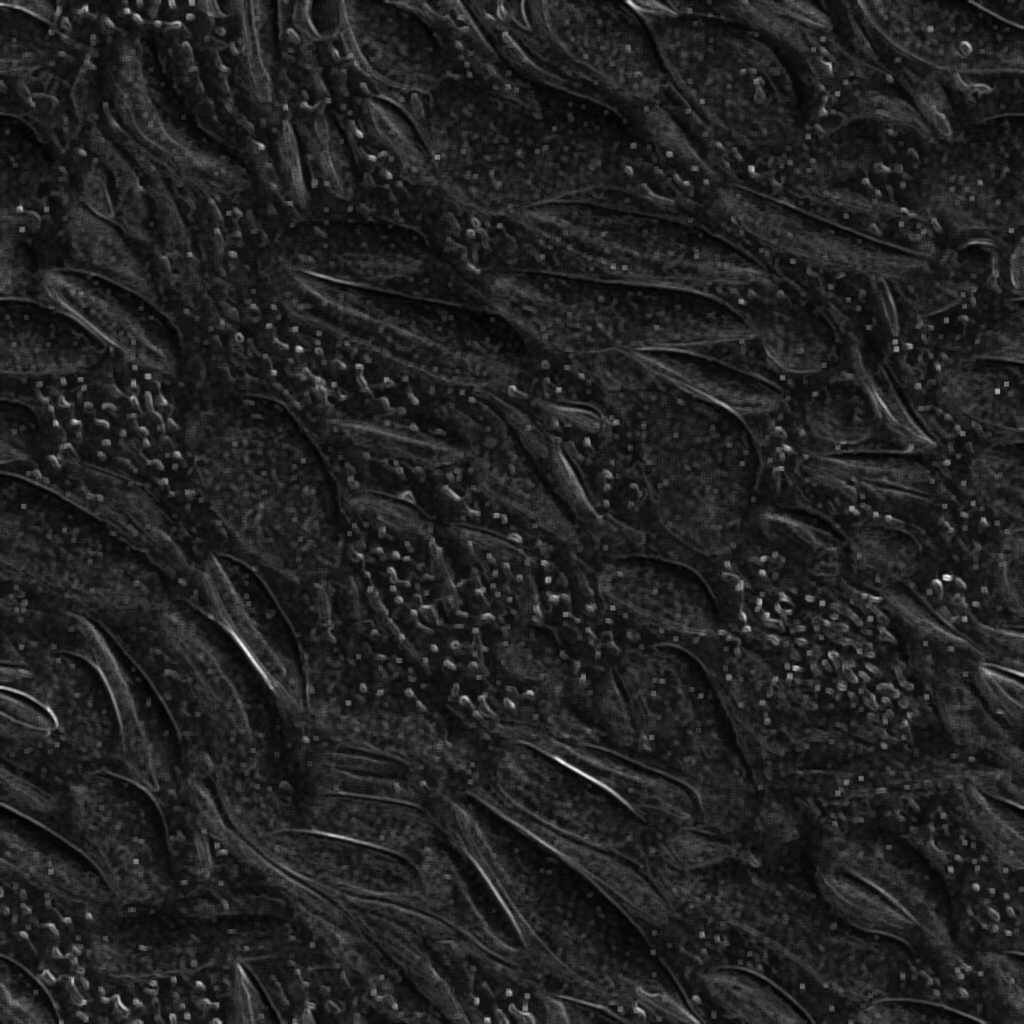

Following the above pattern, generate_image is a wrapper around an inference script running Stable Diffusion XL. I wanted to try fine tuning SDXL to be able to generate PBR (physically based rendering) textures: images that look like the base color image, but encode specific 3D information like height or normals.

I downloaded some textures from AmbientCG, but wasn't able to vibecode a LoRA script for SDXL due to my lack of familiarity with diffusion model training, so I went with a simpler approach inspired by this post: generate a color texture image, then use basic image processing to derive each PBR map. For example:

    import cv2
    import numpy as np

    def generate_displacement(color_image):
        """
        Generate Displacement map from color image.
        Displacement represents surface height variations.
        Uses luminance as a proxy for surface elevation.
        """
        # Convert to luminance (rgb_to_luminance is a helper not shown here)
        luminance = rgb_to_luminance(color_image)
        # Apply bilateral filter to smooth while preserving edges
        displacement = cv2.bilateralFilter(luminance.astype(np.uint8), 9, 75, 75)
        # Enhance height variation with gamma correction
        displacement = displacement.astype(np.float32) / 255.0
        displacement = np.power(displacement, 0.8)  # Gamma < 1 lifts midtones
        displacement = (displacement * 255).astype(np.uint8)
        return displacement

This is a naive approach. It's pretty fake to guess at PBR textures from the color data, since the whole point of the PBR textures is to convey 3D information that can't be derived from the color data. There are models that can generate better PBR maps based on actual 3D knowledge within the network (such as ldm3d-4c for depth map generation or MeshGen), but this was good enough for prototyping a generate_texture tool.
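
The same luminance-as-height guess can be pushed one step further to a normal map by taking slopes of the height field. The sketch below is illustrative rather than the script used for generate_texture: height_to_normal_map and its strength parameter are hypothetical, it is written in plain Python to stay dependency-free (a real pipeline would likely use NumPy or OpenCV), and the sign of the green channel is a convention that differs between renderers.

```python
import math


def height_to_normal_map(height, strength=1.0):
    """Convert a 2D grid of heights in [0, 1] to per-pixel RGB normals.

    Uses central differences with clamped borders and returns rows of
    (r, g, b) tuples in 0..255, the usual tangent-space encoding.
    """
    rows, cols = len(height), len(height[0])
    normal_map = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Slope along x and y, clamping indices at the image border
            dx = height[y][min(x + 1, cols - 1)] - height[y][max(x - 1, 0)]
            dy = height[min(y + 1, rows - 1)][x] - height[max(y - 1, 0)][x]
            nx, ny, nz = -dx * strength, -dy * strength, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            # Map each unit-vector component from [-1, 1] to [0, 255]
            row.append(
                tuple(round((c / length * 0.5 + 0.5) * 255) for c in (nx, ny, nz))
            )
        normal_map.append(row)
    return normal_map
```

A perfectly flat height field comes out as the uniform light blue typical of normal maps, while any slope tilts the red and green channels away from the midpoint.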

I explored a few different approaches for generating mesh assets, for the prompt "3D model of a wooden hammer".

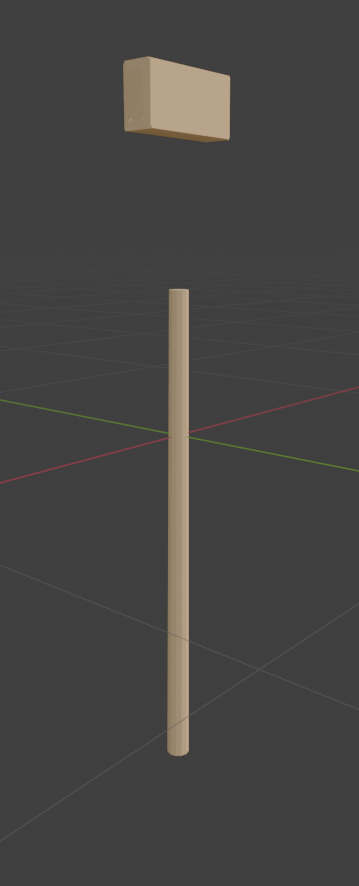

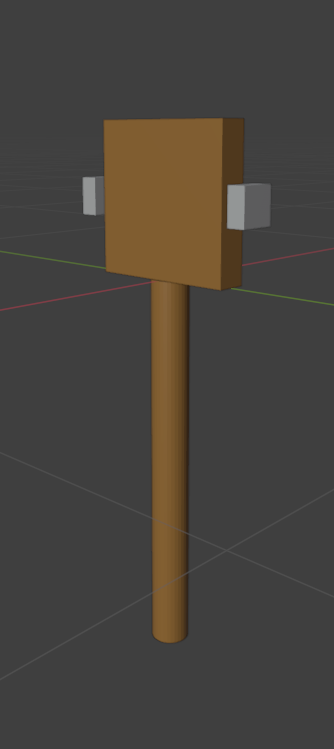

We can generate plain code for creating and manipulating meshes via sequential Blender API calls. On the left, Claude produced a mesh that looks somewhat like a hammer in 240 lines of code, and on the right, BlenderLLM produced a mesh that looks slightly more like a hammer, in a more succinct 40 lines of code.

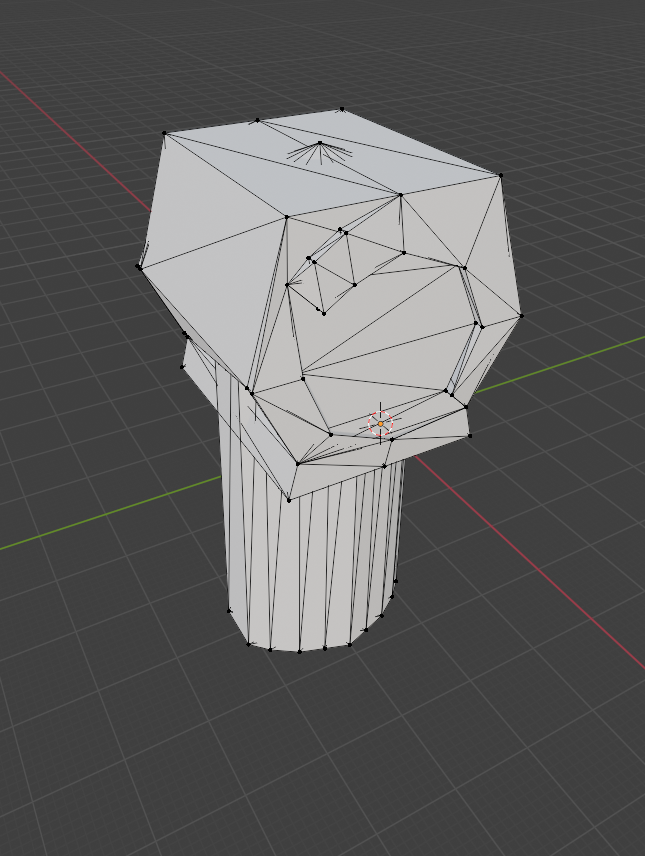

The LLaMA-Mesh approach is cool: this is a tuning of LLaMA, a language model. They're using a language model to generate vertices and faces, one token at a time, relying on spatial reasoning of the language model. The output is a valid OBJ file, thanks to that file format's simplicity:

    # Simple cube OBJ file
    # 8 vertices for a unit cube
    v -0.5 -0.5 -0.5
    v 0.5 -0.5 -0.5
    v 0.5 0.5 -0.5
    v -0.5 0.5 -0.5
    v -0.5 -0.5 0.5
    v 0.5 -0.5 0.5
    v 0.5 0.5 0.5
    v -0.5 0.5 0.5
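
To show how little structure the format needs, here is a minimal sketch of a reader for the two record types that matter for this use case: vertices (v) and faces (f). parse_obj is a hypothetical helper, not part of LLaMA-Mesh. It ignores normals, texture coordinates, and the negative (relative) indices that the full OBJ format allows.

```python
def parse_obj(text: str):
    """Parse vertex (v) and face (f) records from Wavefront OBJ text."""
    vertices, faces = [], []
    for line in text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue  # skip blank lines and comments
        if parts[0] == "v":
            vertices.append(tuple(float(p) for p in parts[1:4]))
        elif parts[0] == "f":
            # Face entries may look like "3", "3/1" or "3/1/2"; keep the
            # vertex index and convert from 1-based to 0-based.
            faces.append(tuple(int(p.split("/")[0]) - 1 for p in parts[1:]))
    return vertices, faces
```

Because every record is a short line of whitespace-separated tokens, a language model can emit a mesh as plain text without any binary framing.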

The downside is that language models don't appear to have great spatial reasoning skills: the output topology is messy, and this doesn't appear to extend beyond simple meshes. It took almost an hour to do inference on my GPU (but since other tools have made practical use of this, there must have been a bug in my inference code).

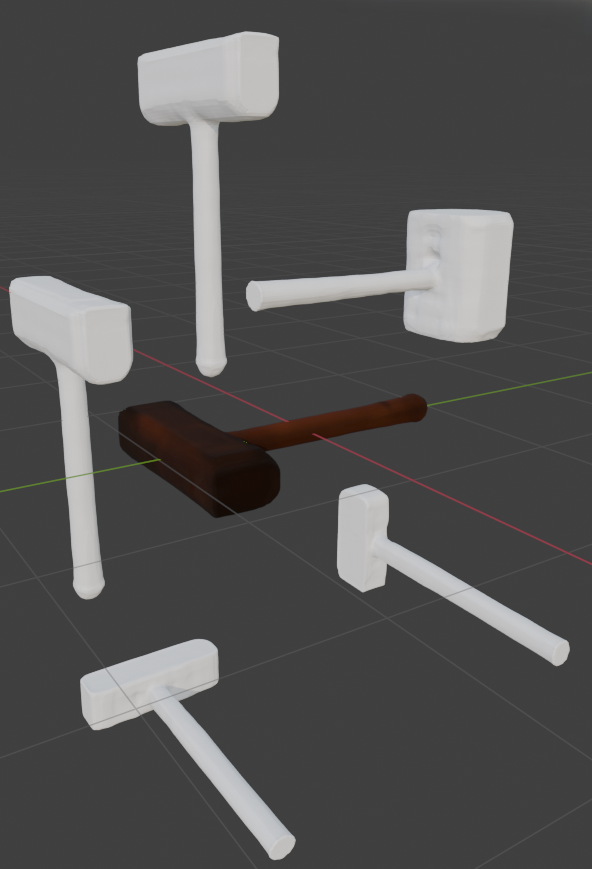

OpenAI's Shap-E consistently generated nice low-poly meshes, reminiscent of old-school RuneScape assets. This is the model I ultimately used for this MCP tool.

InstantMesh produces some beautiful results, though the output mesh frequently has holes not present in the input. This is a single image to 3D mesh model; the above example used this CC0 glazed porcelain hammer as input. I wanted to write a pipeline that converts text -> image via SDXL, then image -> mesh via InstantMesh. However, the dependencies were too messy for me to package this as a CLI script. I had to do a bit of patching just to get the basic demo working; the dependency nvdiffrast didn't support newer GPUs, so I forced the script to use earlier CUDA and GCC versions:

    +#!/usr/bin/env python
    +# Set environment variables BEFORE any imports
     import os
    +os.environ['TORCH_CUDA_ARCH_LIST'] = '8.9' # Force Ada Lovelace compatibility for Blackwell GPUs
    +os.environ['CUDA_LAUNCH_BLOCKING'] = '1'
    +os.environ['FORCE_CUDA'] = '1'
    +# Try to use system GCC 11 if available, otherwise disable CUDA rendering
    +import subprocess
    +try:
    +    result = subprocess.run(['gcc-11', '--version'], capture_output=True, text=True)
    +    if result.returncode == 0:
    +        os.environ['CC'] = 'gcc-11'
    +        os.environ['CXX'] = 'g++-11'
    +        print("Using GCC 11 for CUDA compatibility")
    +    else:
    +        raise FileNotFoundError
    +except (FileNotFoundError, subprocess.SubprocessError):
    +    print("WARNING: Compatible GCC version not found. nvdiffrast may fail to compile.")
    +    print("Consider installing GCC 12: sudo apt install gcc-12 g++-12")
    +    # Fall back to disabling CUDA extensions
    +    os.environ['NVDIFFRAST_FORCE_OPENGL'] = '1'
    +
     import imageio
     import numpy as np
     import torch
    +
    +# Monkey patch PyTorch's CUDA capability detection for nvdiffrast compatibility
    +def patched_get_device_capability(device=None):
    +    """Return Ada Lovelace capability instead of Blackwell for nvdiffrast compatibility"""
    +    return (8, 9) # Ada Lovelace instead of (12, 0) Blackwell
    +
    +if torch.cuda.is_available():
    +    original_get_device_capability = torch.cuda.get_device_capability
    +    torch.cuda.get_device_capability = patched_get_device_capability
    +    print("Patched PyTorch CUDA capability detection for nvdiffrast compatibility")

I wrote a wrapper around Qwen2.5-VL-3B-Instruct, a popular vision-language model. The hope was that the agent would run get_viewport_screenshot, then describe_scene to understand qualitatively what the scene looks like, and adapt based on feedback. But the feedback from describe_scene isn't detailed enough to be actionable. The agent already knows "I'm building a desert scene in Blender", and all describe_scene says is "This looks like a screenshot from Blender depicting a desert scene".

I used Claude's agent generation CLI, then appended details on tool use to the generated prompt:

Tool Use:

- If you want to generate a simple mesh, execute Blender code to achieve this. If you want to generate a more complex mesh, invoke generate_lores_mesh and then import the resulting file into the Blender scene and place it as you wish. The lores meshes do have color data, so you must plug the "Color Attribute" node with the value "Color" (NOT the "Attribute" node) into the Base Color on these meshes. An imported mesh will have its center of mass located at Z = 0, so you ought to move these up so they don't clip with the ground.

- If you want to create an interesting distant background, invoke generate_image and place the resulting image at an appropriate place in the scene. I recommend that you import background and distant midground images as planes and orient them facing the camera to achieve the illusion of depth.

- If you want to add a texture on an object, invoke generate_image, then invoke generate_texture to produce PBR texture images, then create a material that loads them into Blender. This is mandatory for objects that should have a detailed repeating texture, like rocks, sand, stone walls, and grass.

- If you want to understand the current state of the scene, invoke get_viewport_screenshot, then call describe_scene with the resulting image filepath. Please do this at the very end so that you can tell me what the computer vision says about the scene.
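
The instruction about lifting imported meshes off the ground comes down to a small calculation: find the lowest point and translate by the difference. The sketch below works on plain (x, y, z) tuples so it runs outside Blender. ground_offset is a hypothetical helper. Inside Blender the vertices would come from the object's world-space bounding box (bound_box transformed by matrix_world), which is assumed here rather than shown.

```python
def ground_offset(vertices, ground_z=0.0):
    """Return the Z translation that rests the lowest vertex on ground_z."""
    lowest_z = min(z for _x, _y, z in vertices)
    return ground_z - lowest_z


# A unit cube centered on the origin sits half below the ground plane
cube = [
    (x, y, z)
    for x in (-0.5, 0.5)
    for y in (-0.5, 0.5)
    for z in (-0.5, 0.5)
]
lift = ground_offset(cube)
```

Adding lift to the object's Z location places its base on the ground. This only corrects height, so a mesh imported with the wrong orientation still needs to be rotated first.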

0:00

initial prompt

ask the blender agent to create a scene set in a desert nomad's camp. there are men gathered around a well, drinking tea. there are animals grazing on scant pasture, like goats, and camels, and there are horses as well. there are objects of varying height that create a sense of depth in the scene, such as tents, rugged topography, and occasional plants. there are sand dunes in the mid ground and distant mountains in the background.

0:23

Agent blocked out the scene with some initial meshes and a background plane.

0:38

Agent added generated assets of a tent and a man, and duplicated them, but the outputs of the mesh generator are oriented facing down, so the objects are partially submerged. I could fix this specific bug and correct the orientation in the Shap-E script, but in general, this agent doesn't know when a mesh is facing the wrong way, or is clipping another mesh.

1:32

Agent generates a sand texture with generate_image and generate_texture. I'm pleased with the visual quality for something that was autogenerated.

1:45

Agent tries to set up the camera and Blender crashes. This was the first Blender crash I saw across 5 runs of the agent, but I kept it in the demo to show how the agent resumes after failure. I manually restarted Blender, resumed listening to the MCP server, and reprompted the agent. Sadly, it looks like we lost the sand texture here.

2:51

Agent adds some code-generated horses, seemingly unaware it has already added mesh-generated horses.

3:17

Agent tried modeling some desert plants and is now in edit mode. It tries to add lighting, but Blender is context specific so this isn't a valid operation in edit mode.

3:42

Agent adds materials to everything.

3:46

Agent is done, now I inspect its work. I tell it to fix some materials it overwrote, and to correct the direction of the imported meshes.

4:31

I tell the agent to apply another fix on the material nodes.

I learned a lot from this project, and am eager to try the following next: